Google's Soli chip seems to have so much wasted potential.

When Google first demonstrated its ATAP group's Project Soli concept, almost everyone who saw it was not only impressed but sure that this was going to be part of the future of the user interface. I can vividly remember my own excitement because this was something completely different and seemed like it was straight out of a movie. I couldn't wait to see Soli actually inside a Google hardware product.

And then when it did happen on the Pixel 4, it was absolutely underwhelming. Sure, having hands-free controls that actually worked for your music player or for silencing a call was nice, but Soli's best feature was sensing your hand as you reached for the phone so it could start the facial recognition routine to unlock your screen. It worked, but it was a lot less spectacular than the original demonstration and hardly made the Pixel 4 one of the best Android phones.

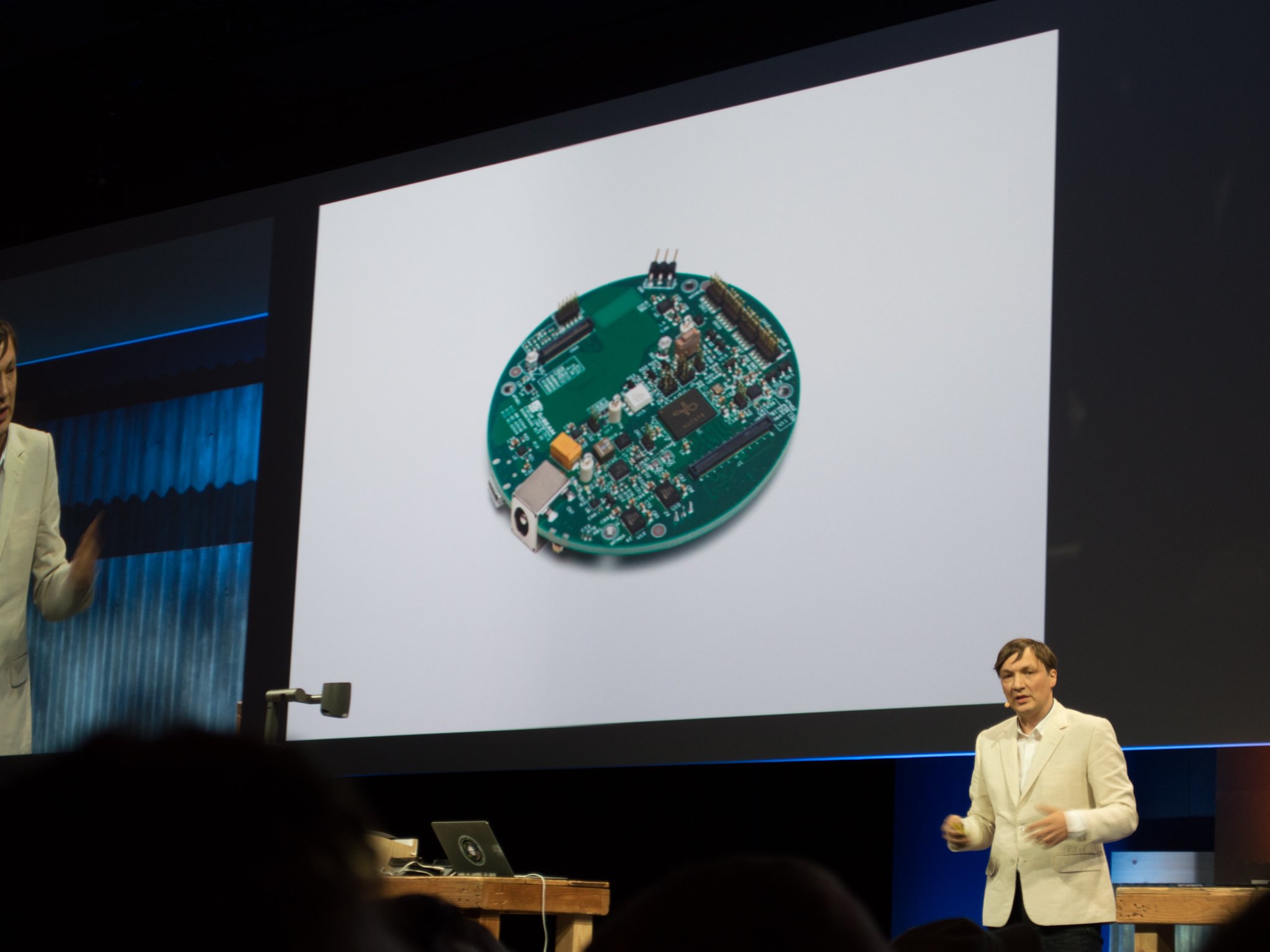

Soli isn't a new idea, but it takes one we're all familiar with and refines it into something that can live inside a piece of consumer electronics like the Pixel 4 or the newly announced Google Nest Hub. It uses radar to detect very fine movements, which software can then use as input the same way it uses a tap or long-press on your screen. In the words of the founder of the project, Ivan Poupyrev, "It blows your mind, usually, when you see things people do. That's what I'm really looking forward to. I'm really looking forward to releasing this to the developer community."

What Soli can do and what it actually does are two very different things.

Unfortunately, what it can do and what it actually does so far are worlds apart. A technology that could be used to navigate an entire user interface and perform any action you need, complete with its own set of universal gestures, was released as a way to skip a song in your music player, and I was very disappointed. Even the Soli Sandbox app showed the potential a lot better than Google's own use.

Using Soli to make sure you're not heating an empty house with a Nest Thermostat is a great idea that will save you money, but it's not very spectacular. Unfortunately, the latest product to use a Soli chip, the new Google Nest Hub, is equally unimpressive in my opinion. There, Soli detects sub-millimeter movement to add a sleep tracking feature to the Nest Hub, and so far no other use for it has been announced or implied.

Sleep tracking can be very important for a lot of people, and not everyone wants to wear a device that doesn't really do a very good job at it. The Google Nest Hub using Soli to track every movement will probably paint a very clear picture of sleep patterns, and as someone who has suffered from chronic insomnia throughout my life, I see the value here. But I was expecting more, like controlling the audio you're playing through the Nest Hub with a twist of the fingers instead of yelling at Google.

As disappointed as I may be, I don't think we've seen the last of Soli. We all know how relentless Google can be about pushing a product or feature at us until we eventually accept it as the new normal, because it's very likely we've all lived through a change we didn't like. For me, that's on-screen gestures, and for you, it's probably something else that is equally annoying.

Google will keep Soli around because the idea has potential.

Soli is going to stick around because of its potential. Pie-in-the-sky ideas like incorporating Soli into some sort of virtual laser-projected keyboard aside, there are a lot of things that we would love to be able to control without touching them. Take the Chromecast, for example.

A lot of people love using a Chromecast because the phone that's always in their pocket is the remote. A lot of other folks would rather have a dedicated remote or integration into something like a Logitech Harmony universal remote, and that's why the newest version ships with one. I want a little more: I want the new Nest Hub to act as a Chromecast remote that can change volume, fast-forward, rewind, skip tracks (or commercials), and even dim my smart lamps without me touching anything except the air.

Why stop there? A Soli chip in a Nest Protect could let me know my family or pets are awake and moving, or even keep cranking up the alert volume until they are. Drop Soli into a small remote sensor as part of a security system or, even better, use the Nest Hub to detect motion for security purposes. Soli doesn't only detect minute movement, after all. Or how about using Soli to translate sign language into audio so people with hearing difficulties can communicate better?

I can think of ways to use Soli so I'm sure Google is doing the same.

Maybe my biggest hope is to see Soli inside an Android Auto head unit so you never ever have to touch anything except the steering wheel while you're driving. Of course, I have no idea how feasible these ideas are or how expensive they would be to incorporate. Nobody would buy a $500 Nest Protect, after all.

I do think that Google will keep using Soli in small, seemingly inconsequential ways while it works out the next big idea, though. Eventually, the company will find the use case that we really love, and it can call Soli a success. Until then, we can track our sleep and skip through our music until the Pixel 4's battery dies in the middle of the day.

Source: androidcentral