iOS 16.1 is now available, and iOS 16 as a whole comes packed with new features, including Live Activities, iCloud Shared Photo Library, and new collaboration tools.

It’s not all about new features, though, as it also brings a huge update to iOS 15’s excellent Live Text feature that arrived last year.

The clever feature has been incredibly useful since its introduction, letting users grab phone numbers, addresses, and plenty more from images. The good news is that it’s now even better, so here’s what’s new for Live Text, along with how to use it on your iPhone in iOS 16.

What is Live Text and what’s new in iOS 16?

Live Text, in its simplest form, lets you work with text found within an image. For example, whether you’ve taken a photo of a restaurant menu or of a business card you don’t want to forget, Live Text will let you copy the text out and use it to send a message, create a note, use an email address, or make a call.

This is done using on-device intelligence, but that does mean that you’ll need an iPhone XS, XR or later.

It’ll also work on iPadOS 16.1, released in October 2022, so you can use the feature across your devices when needed.

If you have an iPhone capable of running iOS 16, the headline new Live Text feature this year is the ability to pull text from video content.

The idea is that if you’re watching, for example, an educational video and need to pull some of the data into your notes, you can do so by pausing the video and highlighting the text.

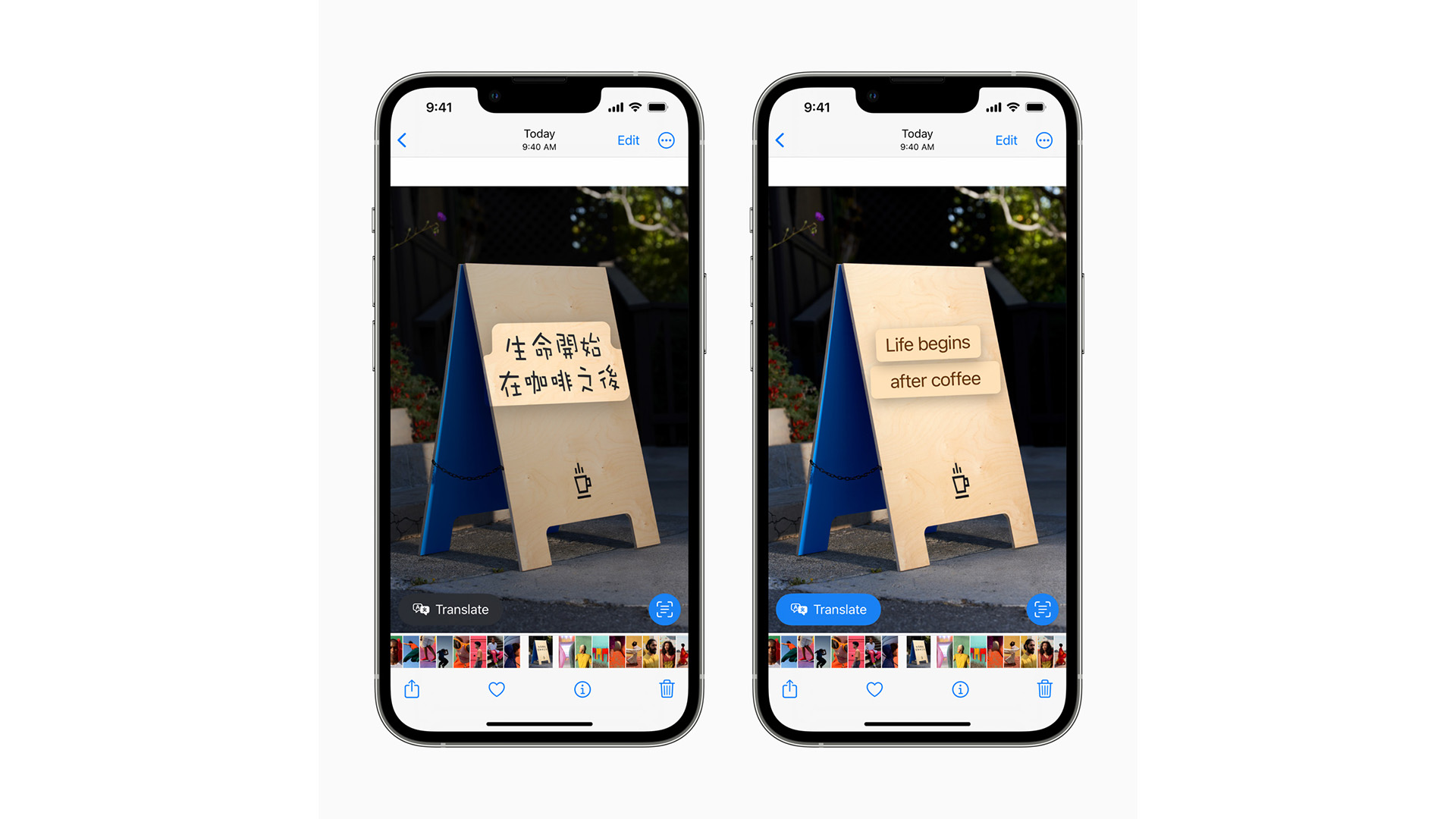

Another big new feature is the inclusion of 'Quick Actions' for Live Text. This allows you to take a photo of text in another language, for example, and instantly translate it.

Other quick actions available include starting an email when finding an email address, calling phone numbers, converting currency and plenty more.

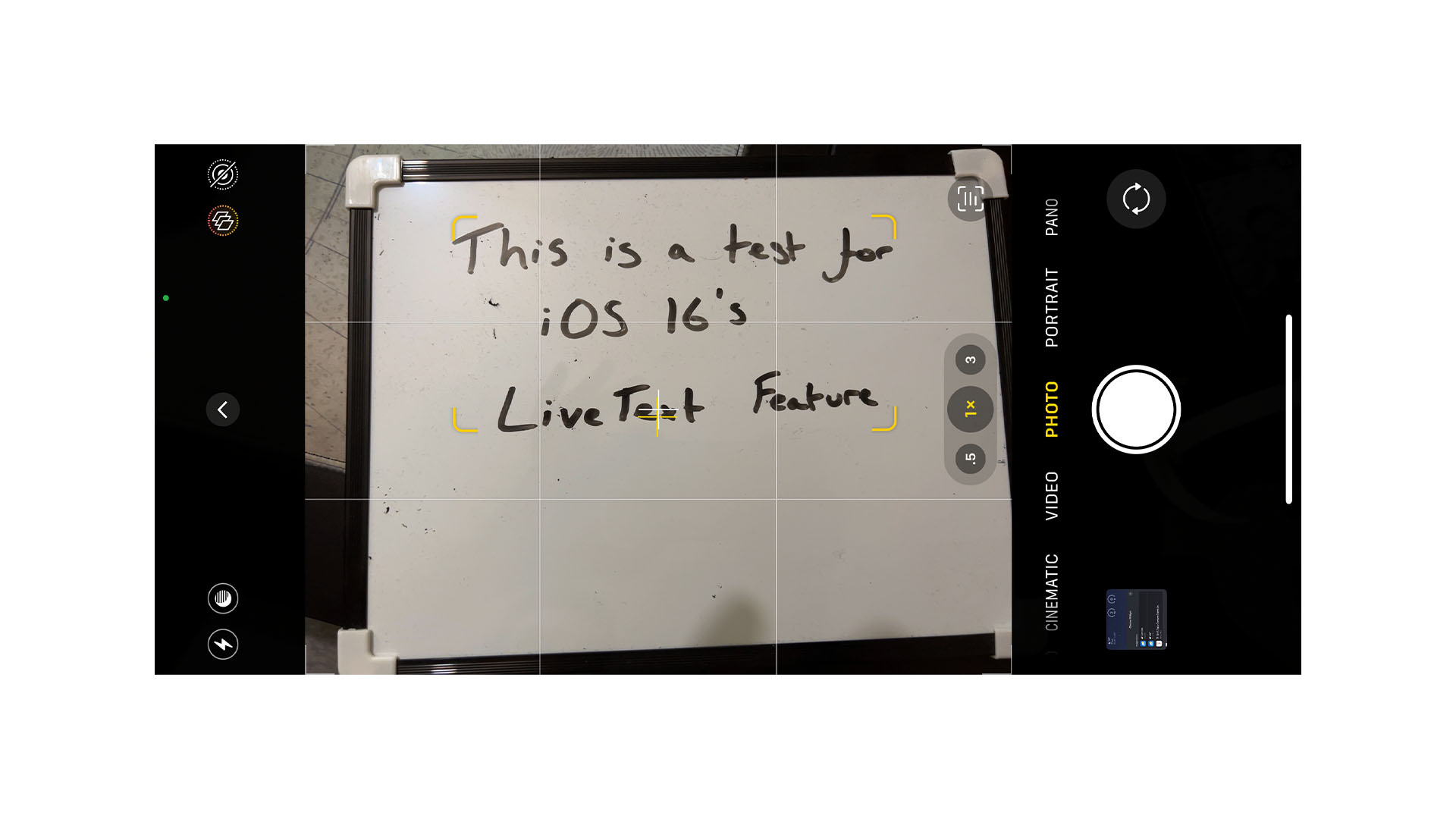

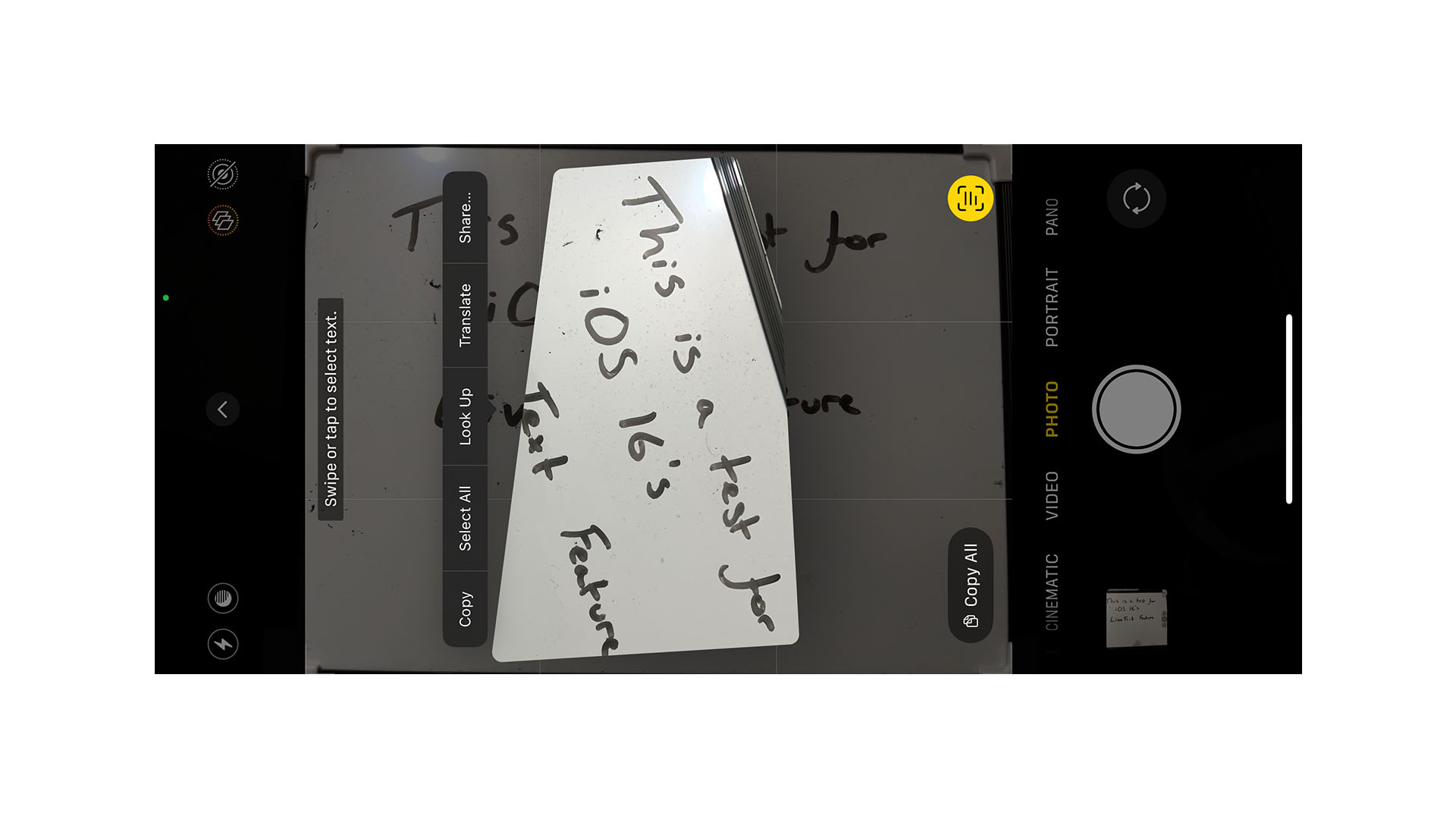

How to use Live Text in the Camera app

In the Camera app of iOS 16, hold your device so that the text is clearly visible on your screen and tap the icon that looks like a barcode scanner in the corner of the viewfinder.

Doing so brings up the Live Text overlay, and lets you highlight parts of the text shown. You can also tap the 'Copy All' Quick Action in the corner, which will copy all of the text to your clipboard so you can paste it into an app immediately.

In the example above, you can see that Live Text gets a little muddled and thinks the image is upside down, but copying the text will, naturally, restore the correct orientation.

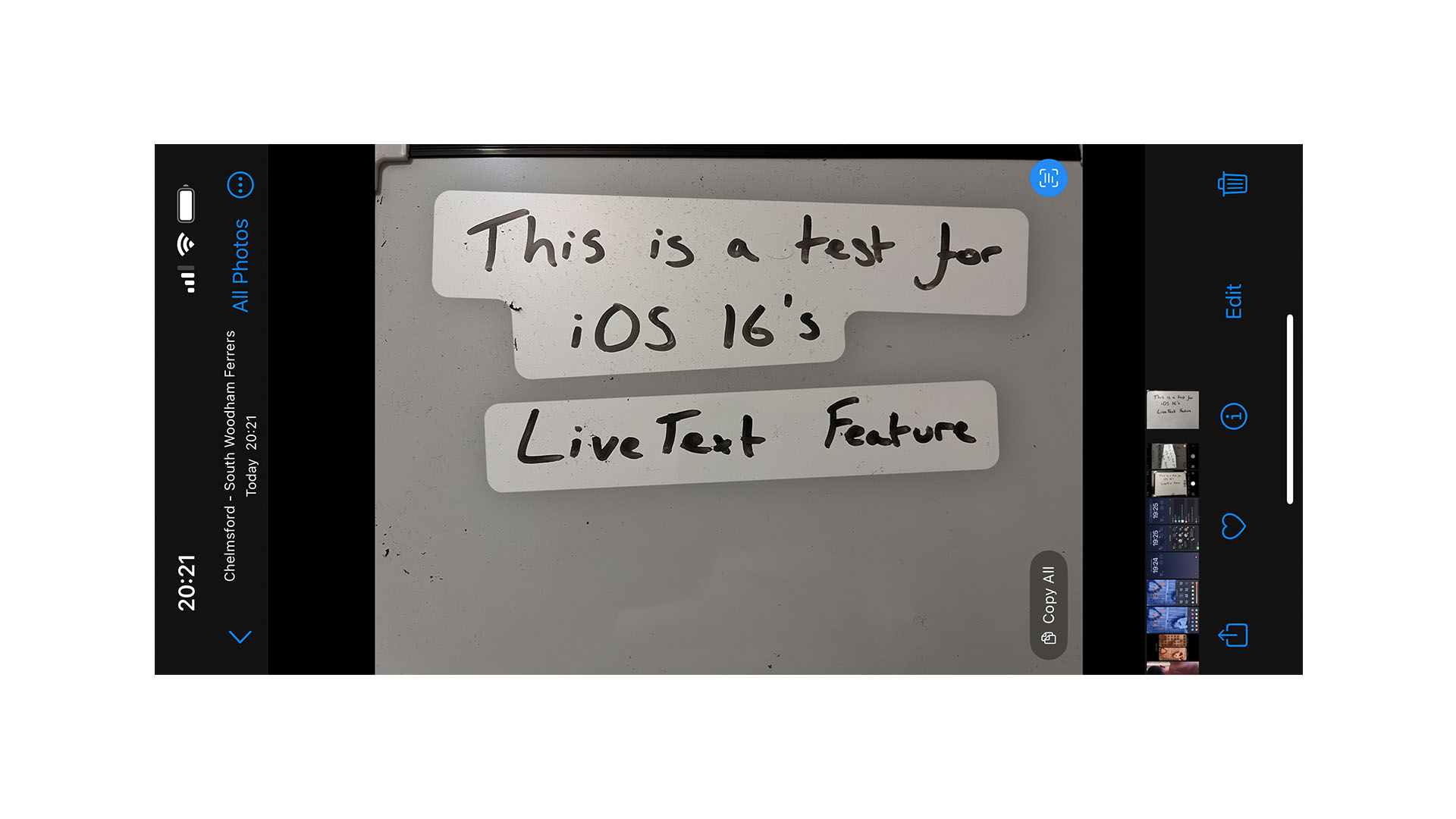

How to use Live Text in the Photos app

The same process applies to images and videos in the Photos app. Simply tap the aforementioned barcode scanner-style button and you’ll get the option to 'Copy All' or highlight individual parts of the text.

This can be particularly helpful if you haven’t got time to hover and copy down some information – simply grab a photo or video, and come back to it in your own time.

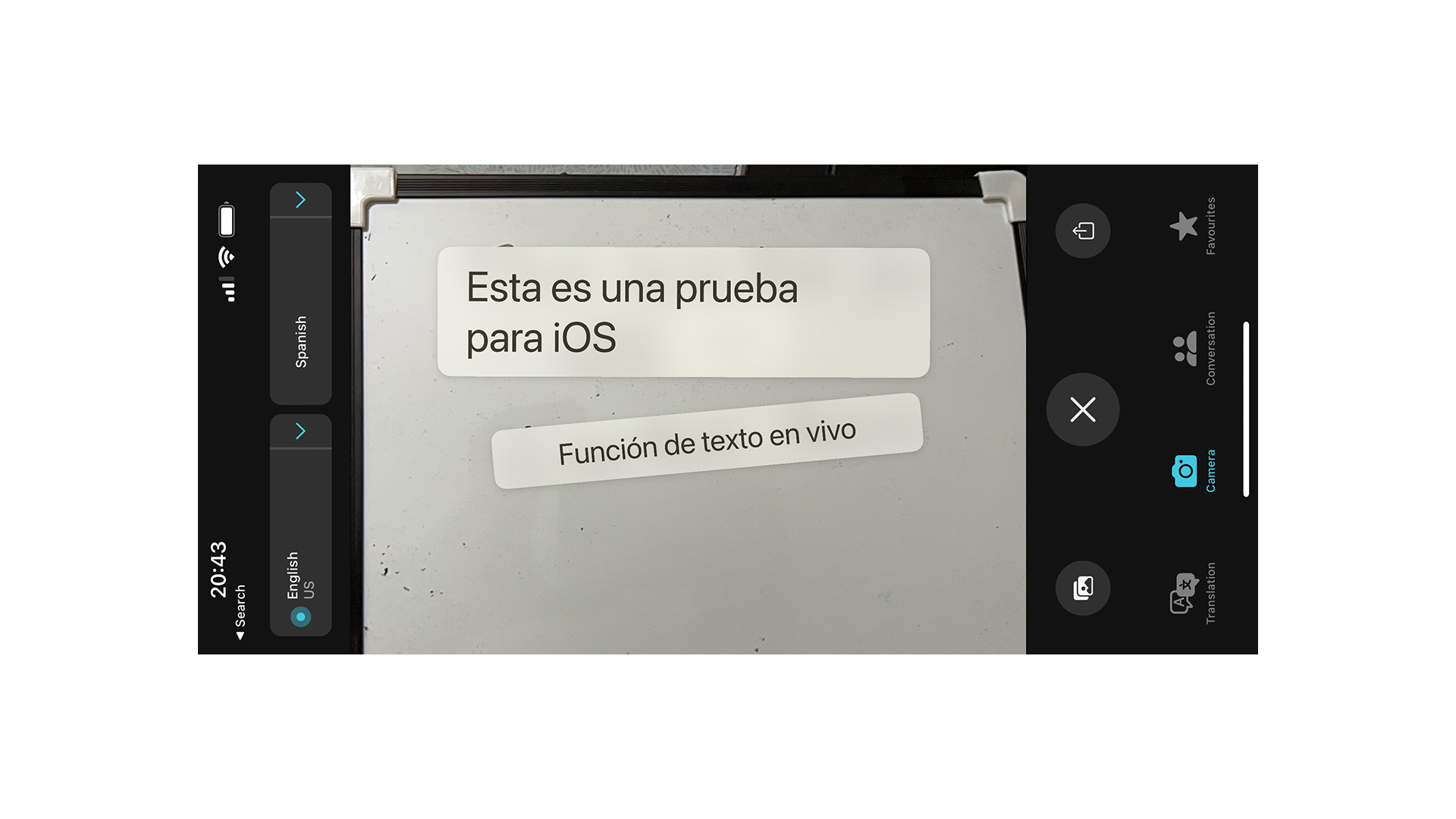

How to use Live Text in the Translate app

One of the big additions in iOS 16 is being able to access Live Text through the impressive Translate app. If you’ve not used the app before, you’ll find it in your App Library, or via a Spotlight search.

Once you’re in, there’s a new option at the bottom of the screen to open the camera. It won’t translate in real time, as you’ll need to take a photo first, but the app does work incredibly quickly.

You can pick the language after the fact, too, meaning if you need to translate a phrase into multiple languages, you can do so with ease.

Source: TechRadar